Mice and Elephants in my Data Center

Elephant flows

A long-lived flow transferring a large volume of data is referred to as an elephant flow, in contrast to the numerous smaller, short-lived flows in the data center, which are referred to as ‘mice’. Elephant flows, though far fewer in number, can monopolize network links and consume all of the buffer allocated to a port. This can temporarily starve mice flows and disrupt the overall performance of the data center fabric. Juniper will soon introduce Dynamic Load Balancing (DLB) based on Adaptive Flowlet Splicing as a configuration option in its Virtual Chassis Fabric (VCF). DLB provides much more effective load balancing than traditional hash-based load distribution. Together with the existing end-to-end path-weight based load balancing mechanism, it gives VCF a strong load distribution capability that will help network architects drive their networks harder than ever before.

Multi-path forwarding

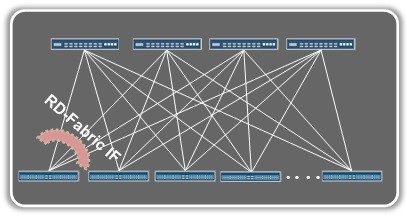

Multi-path forwarding refers to the balancing of packets across multiple active links between two network devices or two network stages. Consider a spine and leaf network with 4 spines: all traffic from a single leaf is spread across all the uplinks in order to use as much of the available aggregate bandwidth as possible and to provide redundancy in case of link failure.

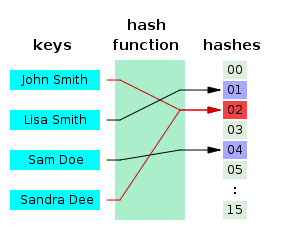

Multi-path forwarding is typically based on a hash function. A hash function maps a virtually infinite collection of data into a finite or limited set of buckets or hashes, as illustrated below.

Image source: Wikipedia (http://en.wikipedia.org/wiki/Hash_function)

In networking terms: the hash function will take multiple fields of the Ethernet, IP and TCP/UDP header and use these to map all sessions onto a limited collection of 2 or more links. Because of the static nature of the fields used for the hashing function, a single flow will be mapped onto exactly 1 link and stay there for the lifetime of the flow. A good hashing function should be balanced, meaning that it should equally fill all hashing buckets and by doing so provide an equal distribution of the flows across the available links.
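
The static mapping described above can be sketched in a few lines of Python. This is only an illustration: real switches compute the hash in hardware, and the `pick_link` function, the CRC32 hash and the sample addresses are assumptions chosen for the example, not what any particular switch uses.

```python
import zlib

def pick_link(src_ip, dst_ip, proto, src_port, dst_port, num_links):
    """Map a flow's header fields onto one of num_links member links."""
    # The key is built from static header fields only, so it never changes
    # during the lifetime of the flow.
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % num_links

# A hypothetical TCP flow (protocol 6) hashed onto 4 uplinks.
flow = ("10.0.0.1", "10.0.1.7", 6, 49152, 443)
first = pick_link(*flow, num_links=4)
again = pick_link(*flow, num_links=4)
print(first == again)  # every packet of the flow lands on the same link
```

Because the hash input does not change from packet to packet, neither does the chosen link, which is what keeps the packets of a flow in order.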

One of the reasons to use static fields for the hashing function is to avoid reordering of packets as they travel through a network when paths might not all be of equal distance or latency. Even if by design all paths are equal, different buffer fill patterns on different paths will cause differences in latency. Out-of-order packets can be put back in sequence by the end-point or in the network, but this always comes at a cost, which is why a network that ensures in-order delivery is preferred.

Because of that static nature, the distribution of packets will be poorly balanced when a few flows are disproportionately larger than the others. A long-lived, high-volume flow is mapped to a single link for its whole lifetime and can exhaust the buffer of that link, resulting in packet drops.
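
A small experiment makes the imbalance concrete. The sketch below assumes 200 mice of 10 KB each and a single 1 GB elephant, hashed per flow onto 4 links; the flow names, the sizes and the CRC32 hash are invented for illustration.

```python
import zlib

def link_for(flow_id, num_links):
    """Static per-flow hash: the same flow always maps to the same link."""
    return zlib.crc32(flow_id.encode()) % num_links

def bytes_per_link(flows, num_links):
    """Sum the bytes each link carries when every flow stays on one link."""
    load = [0] * num_links
    for flow_id, size in flows.items():
        load[link_for(flow_id, num_links)] += size
    return load

flows = {f"mouse-{i}": 10_000 for i in range(200)}  # 200 mice, 10 KB each
flows["elephant-0"] = 1_000_000_000                 # one 1 GB elephant
load = bytes_per_link(flows, num_links=4)
print(load)
```

However evenly the mice spread out, the link that receives the elephant carries at least 1 GB, while the other three share at most 2 MB between them.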

TCP as in keeping the data flowing

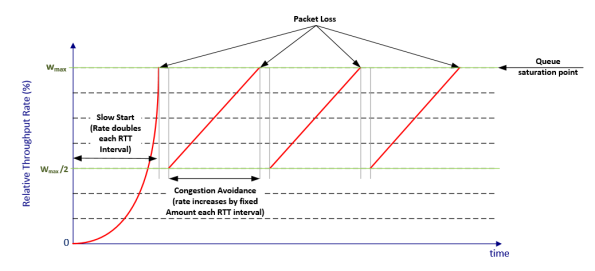

To understand the mechanism of Adaptive Flowlet Splicing, we need to understand some of the dynamics of how data is transmitted through the network. TCP has been architected to avoid network congestion and keep a steady flow of data over a wide range of networks and links. One provision that enables this is the TCP window. The window size specifies how much data can be in flight in the network before the sender must wait for an acknowledgement from the receiver. In effect, the window lets the sender transmit a number of packets without waiting, and each acknowledgement (‘ack’) that comes back lets the sender slide the window forward by one packet. The size of the window is not fixed but dynamic and self-tuning. TCP uses what we call AIMD (Additive Increase, Multiplicative Decrease) congestion control: the window grows additively as acknowledgements arrive and is cut in half whenever acknowledgements go missing (indicating packet loss). The resulting traffic pattern is the typical saw-tooth.
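
The saw-tooth can be reproduced with a toy model of AIMD. The sketch below is a simplification under stated assumptions: the window is counted in segments, it grows by one segment per round trip, and loss is assumed to occur whenever the window exceeds a fixed `capacity`. Real TCP stacks add slow start, timeouts and other refinements that are left out here.

```python
def aimd(rounds, capacity, start=1.0):
    """Return the window size (in segments) at the start of each round trip."""
    window = start
    history = []
    for _ in range(rounds):
        history.append(window)
        if window > capacity:     # assumed loss signal: the path is overfull
            window = window / 2   # multiplicative decrease
        else:
            window = window + 1   # additive increase, one segment per round trip
    return history

trace = aimd(rounds=12, capacity=4)
print(trace)
```

Plotted over time, `trace` climbs in a straight line, drops to half, and climbs again.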

Adaptive Flowlet Splicing (AFS)

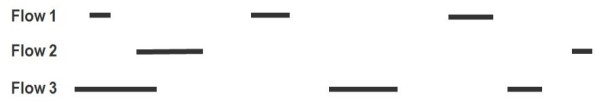

From the above it should be apparent that elephant flows result in a repeating pattern of short bursts followed by quiet periods. This characteristic pattern divides the single long-lived flow over time into smaller pieces, which we refer to as ‘flowlets’. The picture, courtesy of the blog article by Yafan An [*1], shows what flowlets look like within elephant flows when we look at them through a time microscope.

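Now suppose that the quiet times between the flowlets are larger than the biggest difference in latency between different paths in the network fabric. In that case, the last packet of one flowlet always arrives before the first packet of the next, whichever paths the two take, so load balancing based on flowlets will always ensure in-order arrival.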

To distribute the flowlets more evenly across the member links of a multi-path, it would be good to keep some kind of relative quality measure for each link depending on its history. This measure is implemented using the moving average of the link’s load and its queue depth. Using this metric, the least utilized and least congested link among the members will be selected for assigning new flowlets.
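
One way to picture such a quality measure is an exponentially weighted moving average per link. The sketch below is not Juniper's implementation: the `LinkQuality` class, the smoothing factor, the normalization of load and queue depth to the range 0 to 1, and the equal weighting of the two terms are all assumptions made for the example.

```python
class LinkQuality:
    """Tracks a moving average of load and queue depth for one member link."""

    def __init__(self, alpha=0.25):
        self.alpha = alpha       # smoothing factor (assumed value)
        self.avg_load = 0.0      # link utilization, normalized to 0..1
        self.avg_queue = 0.0     # queue occupancy, normalized to 0..1

    def update(self, load, queue_depth):
        """Fold a new sample into the moving averages."""
        a = self.alpha
        self.avg_load = (1 - a) * self.avg_load + a * load
        self.avg_queue = (1 - a) * self.avg_queue + a * queue_depth

    def score(self):
        # Lower is better; weighting both terms equally is an assumption.
        return self.avg_load + self.avg_queue


def best_link(links):
    """Return the index of the least utilized, least congested link."""
    return min(range(len(links)), key=lambda i: links[i].score())


links = [LinkQuality() for _ in range(4)]
samples = [(0.9, 0.8), (0.2, 0.1), (0.5, 0.5), (0.7, 0.3)]
for link, (load, queue) in zip(links, samples):
    link.update(load, queue)
chosen = best_link(links)
print(chosen)  # index of the link a new flowlet would be assigned to
```

The moving average smooths out momentary spikes, so a single burst does not immediately disqualify an otherwise lightly used link.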

Is this elephant flow handling unique to VCF?

By no means. But the controlled environment and the imposed topology of the VCF solution allow Juniper to get the timings right without having to resort to heuristics and complex analytics. In a VCF using only QFX5100 switches in a spine and leaf topology, each path is always 3 hops. The latency between two ports across the fabric is between 2µs and 5µs, resulting in a latency skew of at most 3µs. As a consequence, any inter-arrival time between flowlets larger than the 3µs latency skew allows flowlets to be reassigned to other member links without impacting the order of arrival of packets.

In an arbitrary network topology using a mix of switch technologies and vendors, every variable introduced makes it considerably more complex to get the timings right and to find the exact point at which to split the elephant flow into flowlets for distribution across multiple links.

Another problem we did not have to address in the case of VCF is how to tell an elephant from a mouse. AFS records the timestamp of the last received packet of a flow. In combination with the known latency skew of 3µs, that timestamp is enough to indicate when the flow can be reassigned to another member link. It is less important for AFS to be aware of the flow’s actual nature.
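
The timestamp check can be sketched as a small flow table. This is a hedged illustration rather than the actual AFS data structure: the `FlowletTable` class, its `forward` method and the `pick_link` callback are hypothetical names, and only the 3µs skew is taken from the text.

```python
SKEW_US = 3.0  # latency skew quoted in the text, in microseconds

class FlowletTable:
    """Remembers, per flow, when the last packet was seen and on which link."""

    def __init__(self, pick_link, skew_us=SKEW_US):
        self.pick_link = pick_link  # callable returning a link index
        self.skew_us = skew_us
        self.entries = {}           # flow key -> (last_seen_us, link)

    def forward(self, flow, now_us):
        """Return the link for a packet of `flow` arriving at time now_us."""
        entry = self.entries.get(flow)
        if entry is None or now_us - entry[0] > self.skew_us:
            link = self.pick_link() # new flow or new flowlet: free to move
        else:
            link = entry[1]         # same flowlet: stay on the current link
        self.entries[flow] = (now_us, link)
        return link

# Stand-in link chooser that returns link 0 first and link 2 next.
choices = iter([0, 2])
table = FlowletTable(pick_link=lambda: next(choices))
a = table.forward("flow-A", now_us=0.0)   # first packet, link is chosen
b = table.forward("flow-A", now_us=1.0)   # 1µs gap, same flowlet
c = table.forward("flow-A", now_us=10.0)  # 9µs gap, new flowlet
print(a, b, c)
```

Note that the table never asks whether a flow is an elephant or a mouse. Any flow that pauses for longer than the skew simply becomes eligible for a new link.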

In arbitrary network architectures, however, as Martin Casado and Justin Pettit describe in their blog post ‘Of Mice and Elephants’ [*2], it might be helpful to differentiate the elephants from the mice and treat them differently. Whether this should be done through distinct queues, different routes for mice and elephants, turning the elephants into mice, or some other clever mechanism is a topic of debate and network design. Another point to consider is where to differentiate between them. The vswitch is certainly a good candidate, but in the end the underlay will be the one that handles the flows according to their nature, and hence a standardized signaling interface between overlay and underlay must be considered.

Conclusion

With AFS in VCF, data center workloads that run on top of the fabric will be distributed more evenly, and congestion on single paths caused by elephant flows will be avoided. For a customer who has no need for a particular topology or for a massively scalable solution, a practical and effective solution like VCF brings a lot of value to the data center.

References

[*1] Yafan An, Flowlet Splicing – VCF’s Fine-Grained Dynamic Load Balancing Without Packet Re-ordering – http://forums.juniper.net/t5/Data-Center-Technologists/Adaptive-Flowlet-Splicing-VCF-s-Fine-Grained-Dynamic-Load/ba-p/251674

[*2] Martin Casado and Justin Pettit – Of Mice and Elephants – http://networkheresy.com/2013/11/01/of-mice-and-elephants/
